Frequently asked questions

Should I vocalise my project?

After all, since character texts are subtitled by default in VTS, is it really necessary?

Generally speaking, we advise you to vocalise your modules, whether you use studio voices or synthesised voices.

This promotes immersion, comprehension and memorisation of the content.

Vocalising also improves accessibility. Because subtitles stay on screen for a time that depends on their length, a learner who did not have time to read all the texts might feel a little lost. Voicing the texts ensures that all the content gets through.

Should I use computer-generated voices or studio voices?

Various parameters can be taken into account when choosing between synthesised or studio voices. Here are some guidelines to help you make your decision:

– The budget: Synthetic voices remain the least expensive solution.

Be careful, however, about the quality of these voices and whether they suit your use, as a poorly voiced project can be counterproductive.

– The type of project: The sector of activity, the area of expertise targeted and the concept of your project may influence your choice. Very top-down and formal content spoken by characters expressing very little emotion can easily be vocalised in a computer-generated voice.

On the other hand, highly narrative and immersive modules with colourful characters whose voices need to convey modulation, or training projects on communication and more advanced interpersonal skills, will find it harder to do without studio-recorded voices. Texts performed by professional actors generate more emotion, which fosters engagement and information retention.

– The target audience: Consider whether your audience can handle computer-generated voices. Can this choice have a negative impact on learning?

– The possible evolution of your project’s texts: If you think your project’s texts are likely to change and the context allows it, synthesised voices may be a good choice.

This may be the case if you want to quickly and cheaply change and re-vocalise the name of a product or a term that you no longer wish to use.

Once the texts have been modified, all you have to do is regenerate the corresponding audio files, with no difference in tone from the rest of the module.


A few words on computer-generated voices

Their quality has improved considerably in recent years, particularly thanks to the artificial intelligence technologies developed by Google and Microsoft.

VTS Editor offers a wide variety of synthesised voices through its 4 partners and lets you generate quality vocalisations in different languages in a single click.

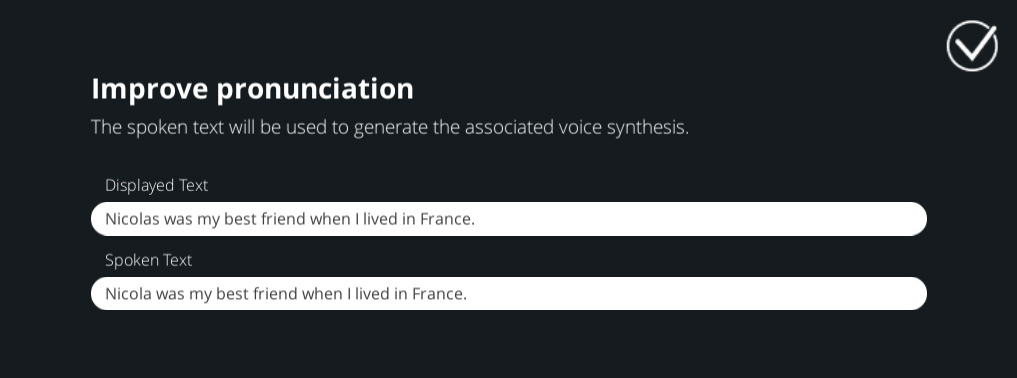

Pro tip: You can improve the pronunciation of a text read by a computer voice by editing its “spoken text” line in VTS. There are two ways to do this, and they can be combined:

1/ By writing the word as you want to hear it pronounced

Example: “Communication should be courteous.”

Do you want the character to run the words “should be” together? Simply write them in the “spoken text” line the way you want them to be heard.

2/ By using Speech Synthesis Markup Language (SSML) for Google voices (those beginning with GW) or the tags provided by Acapela (AC).
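To give an idea of what SSML looks like, here is a minimal Python sketch that builds a spoken-text string with three standard SSML elements supported by Google's text-to-speech service: `say-as` to spell out an acronym, `sub` to replace a term with its intended pronunciation, and `break` to add a pause. The helper names (`spell_out`, `substitute`, `pause`, `speak`) are hypothetical, and we assume that VTS Editor passes markup typed in the “spoken text” line to Google voices unchanged; check the VTS documentation for the tags it actually accepts. Acapela voices use their own tag syntax, which is not covered here.

```python
from xml.sax.saxutils import escape, quoteattr


def spell_out(acronym: str) -> str:
    """Ask the voice to read an acronym letter by letter (SSML say-as)."""
    return f'<say-as interpret-as="characters">{escape(acronym)}</say-as>'


def substitute(written: str, spoken: str) -> str:
    """Have the voice say `spoken` in place of `written` (SSML sub)."""
    return f"<sub alias={quoteattr(spoken)}>{escape(written)}</sub>"


def pause(ms: int) -> str:
    """Insert a silence of `ms` milliseconds (SSML break)."""
    return f'<break time="{ms}ms"/>'


def speak(*parts: str) -> str:
    """Wrap the fragments in the <speak> root element that SSML requires."""
    return "<speak>" + "".join(parts) + "</speak>"


# Example reusing two pronunciation cases discussed in this article.
ssml = speak(
    "Measure the ", substitute("anti-IIa", "anti 2 activated"), " activity.",
    pause(300),
    " Then call the ", spell_out("CPAM"), ".",
)
print(ssml)
```

Escaping the text with `escape` keeps characters such as `&` or `<` in a sentence from breaking the markup.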


Do I have to voice all the characters?

The implicit question behind this one is often: “Should I vocalise the learner character if my module uses a first-person point of view?”

For various reasons, some designers prefer not to vocalise the learner character when the scene is seen through the eyes of the person playing the module:

It may be for budgetary reasons, if the whole module is to be recorded with studio voices.

It may also help learners project themselves in the first person, and it sidesteps a question some clients ask: “Will users be able to get into character if the learner’s voice is performed by a person of the opposite sex?”

We tend to think that identification with a character is not just about gender identity, but also depends on many subtler factors.

Open-mindedness and familiarity with video games also come into play: people who are used to playing a wide variety of characters, some of them far removed from how they see themselves, rarely ask this question.

Vocalising the learner character will make your module more immersive and lively. It also avoids the feeling of getting no spoken response when talking to the other characters.

If your module is short, you could also let your learners choose to play a man or a woman.

Simply duplicate the learner’s role into two characters who never appear on screen, then offer a choice between a female and a male voice at the start of your project.

This operation can also be carried out on a larger module. However, we advise you to pay attention to the following points before you start:

1/ The vocalisation cost will be higher, as the learner’s lines will have to be generated twice with synthetic voices, or recorded by two different actors in the studio.

2/ If changes are made afterwards, you will have to make every change twice and adjust the gender agreements. This concerns the learner’s lines, but also those of other characters who address or refer to the learner.

3/ Finally, duplicating the sound files will increase your module’s size to a greater or lesser extent.

If you choose this option, duplicate the texts only once they have been finally approved, to avoid making every change twice at each stage of the project.

Can I mix studio voices and computer-generated voices?

Have you dreamed of having your module voiced by studio actors, but your budget does not cover recording everything?

In some cases, a mixed computer voice/studio voice solution can be chosen. However, make sure that the contrast between the two is not too disturbing for your learners.

If you need to vocalise all the text in your project for accessibility reasons, not only the characters’ lines but also message and quiz blocks, the word count can quickly add up.

In this case, it may be advisable to use speech synthesis only for reading instructions or any other text that does not require emotions or a particular phrasing.

Can I record the voices myself?

You can, of course, make these recordings yourself if you have acting skills and good-quality audio equipment. This may also be an option if you have a limited budget and the time and inclination to do it. However, it can be time-consuming if you are not already experienced in sound production techniques, or even counterproductive if your voice does not suit the role or if you cannot find the right delivery.

Tips for recording studio voices

Preparation of voice recordings

Do not neglect the preparation of voice recordings before sending them to the studio, whether for lack of time or because you feel that directing actors is not your responsibility.

Your contribution at this stage is essential to facilitate the recording and obtain a result that meets your expectations: each role will be played by a voice suited to the character, making it easier for learners to identify with the characters and become immersed.

Moreover, a professional studio will guarantee a quality result and save you a lot of time by taking care of the following steps:

Getting to grips with the texts and the acting of each character, recording and listening to the tracks, re-recording unsatisfactory takes, and cutting and naming the audio files.

Once you have received these audio files, you can integrate them into VTS Editor very quickly and play them back in context.

Final proofreading before recording

- Read the texts to be vocalised aloud. This will help you check the rhythm, and identify sentences that are too long or that “sound wrong”.

- Also, have the texts proofread by people with good spelling skills who were not involved in the design, to catch any remaining mistakes that may have escaped your attention. Even after several careful proofreads, typos can still slip through because we are too familiar with the texts and no longer see certain details.

- We also advise you to have your draft proofread by people in your final target audience to ensure that all the words are understood by everyone. If not, a simpler synonym or further explanation may be necessary.

Ideally, this review will have been done earlier during user testing, but if not, this is the time to make the final changes! After recording, a change is more complicated. It is not impossible, of course, but it will delay your project and cost more, because you will need to bring back the actors concerned and process the new sound files.

How do we work with the recording studio?

At Serious Factory, we provide the studio with various elements so that they can draw up a quote and record the characters’ voices with as much useful information as possible.

For this purpose, we produce a summary sheet containing the essential information. We recommend that this sheet include:

– The language of recording.

You can also specify the type of accent required if necessary. For example, do you need an American, British or Australian English accent?

– The number of words spoken in total and by each character and the number of sound files that these texts represent. This will enable the studio to draw up an accurate quote based on the actors selected, the number of files to be cut and the specific requirements of your project.

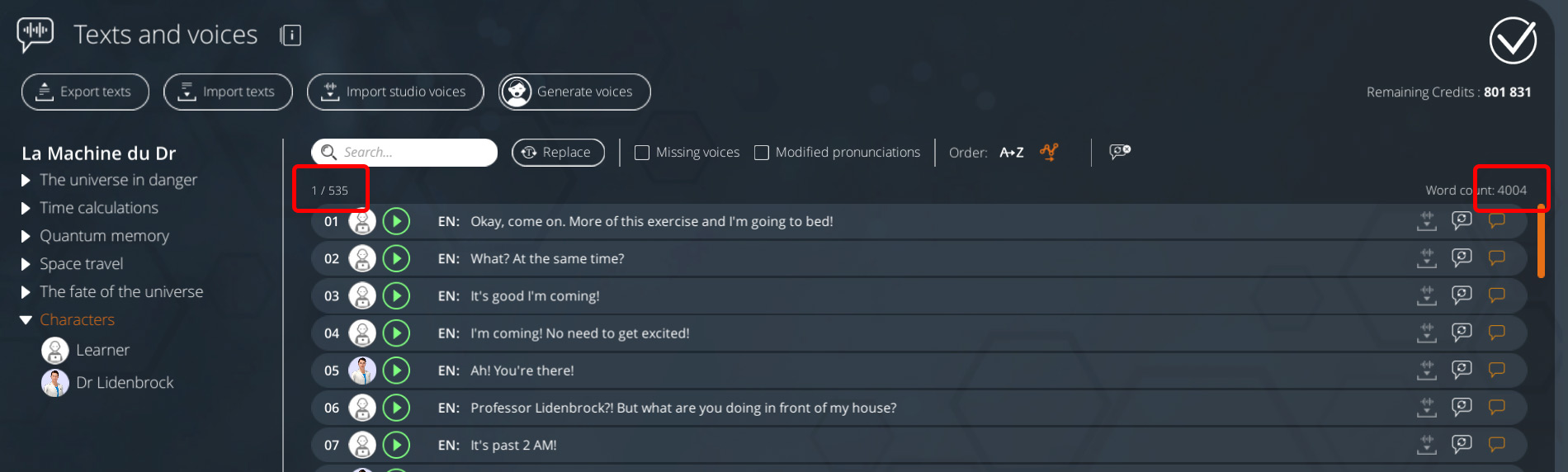

Pro tip: In VTS Editor, you can easily find the number of words and sound files per character and for the whole cast in the text manager (click “Texts & voices” in the menu):

In this example, clicking “Characters” on the left-hand side of the screen displays the total number of sound files (top left of the texts): 535, and the number of words spoken by all the characters (top right): 4004.
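If you want to double-check these figures outside the editor, for instance before requesting a quote, the arithmetic is straightforward. The Python sketch below assumes a hypothetical export reduced to (character, line) pairs, with sample lines made up for illustration, and assumes that each line becomes one sound file. VTS Editor’s own counts remain the reference, since its word-splitting rules may differ from the simple whitespace split used here.

```python
from collections import defaultdict

# Hypothetical rows from a text export: (character, line to be voiced).
# Assumption: each line of text becomes one sound file.
rows = [
    ("John", "Good morning, welcome to the team!"),
    ("John", "Let's start with a quick tour."),
    ("Learner", "Thank you, I'm happy to be here."),
]


def voicing_summary(rows):
    """Return ({character: (sound_files, words)}, (total_files, total_words))."""
    files = defaultdict(int)
    words = defaultdict(int)
    for character, text in rows:
        files[character] += 1
        # Simple whitespace split; VTS Editor may count words differently.
        words[character] += len(text.split())
    per_character = {c: (files[c], words[c]) for c in files}
    totals = (sum(files.values()), sum(words.values()))
    return per_character, totals


per_character, totals = voicing_summary(rows)
for character, (n_files, n_words) in per_character.items():
    print(f"{character}: {n_files} files, {n_words} words")
print(f"Whole cast: {totals[0]} files, {totals[1]} words")
```

With these three sample lines, it reports 2 files and 12 words for John, 1 file and 7 words for the learner, and 3 files and 19 words for the whole cast.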

– A brief description of the context of the module and the general tone:

What type of module and format is it? Is it part of a blended learning course, is it a long or very short format such as a microlearning capsule, a digital escape game, etc.? Is the tone very didactic, with a formal or informal context?

– Some explanation of the nature of the target audience:

For example, are the learners newcomers going through onboarding, or already experienced in the subject?

– A presentation of each character:

You should include their photo, approximate age and role in the script. You can give indications on the type of personality, voice, and general interpretation desired and use short audio or video extracts to specify your request. For large projects requiring a high level of precision, the studio can provide preliminary voice tests.

For example:

John, 55: Senior salesman for company X. He is friendly and a bit of a joker, with a warm, assertive voice.

– Guidance on the character’s non-verbal behaviour can also help actors to portray the character to the best effect:

For example: “The character is injured and in a lot of pain. He is curled up and holding his shoulder.”

N.B.: If the acting required involves a certain degree of complexity, please ensure that your request is sent to the studio in good time to allow the actors sufficient time to get to grips with their roles.

Where possible, we temporarily share the experience with the studio, along with the general export of the texts, so that the person directing the actors can fully grasp the context and guide them as well as possible.

Here is an example of a summary sheet that you can use to communicate with the studio:

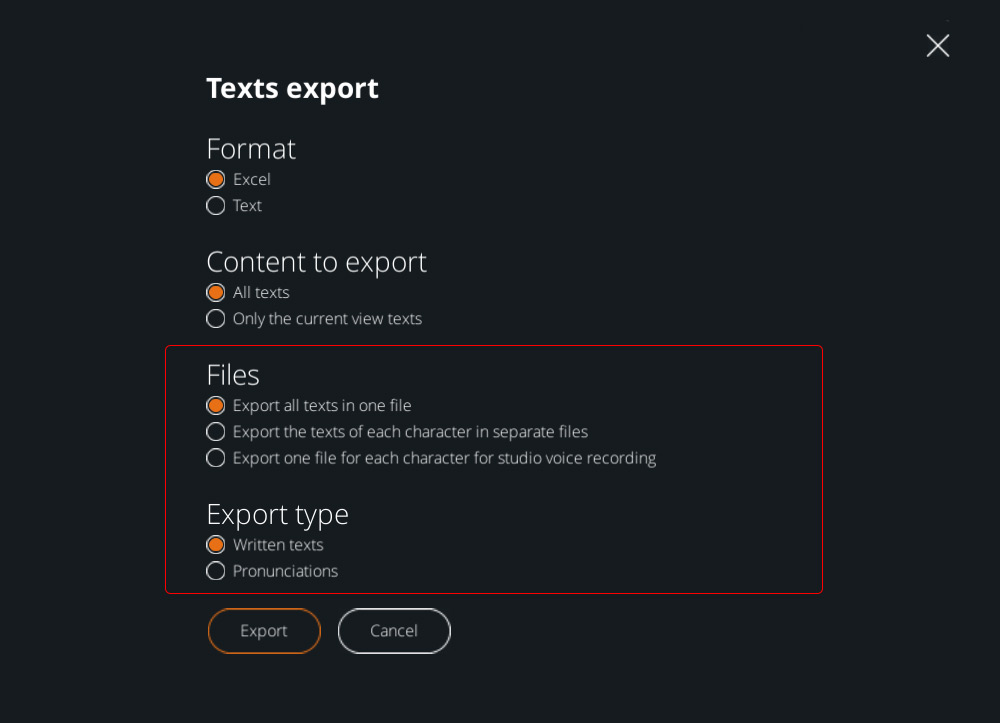

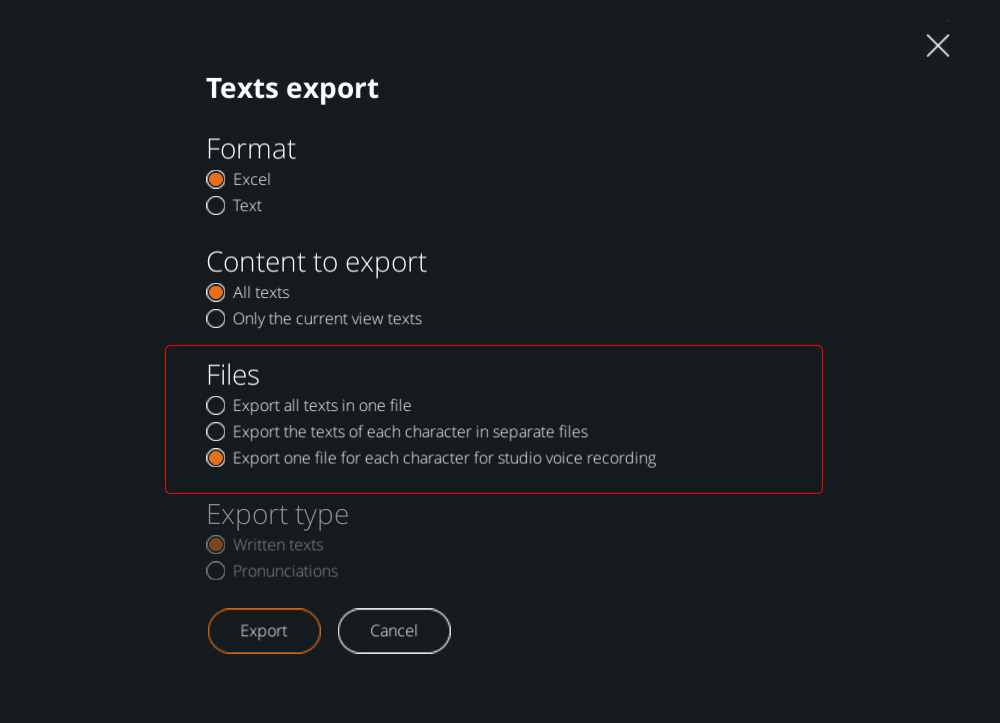

We then export the text files to be recorded for each of the characters.

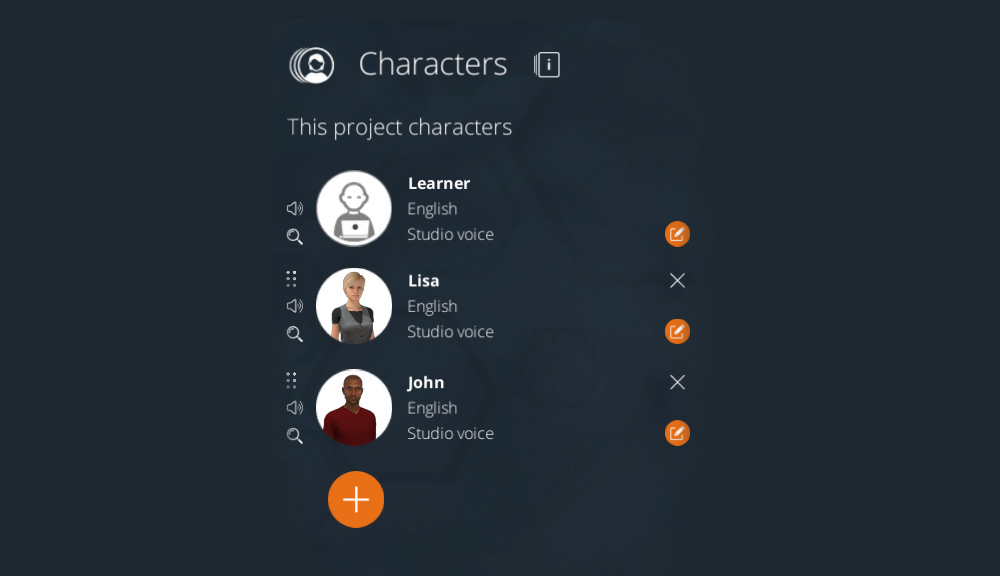

N.B.: If you are exporting the files yourself, remember to set the characters’ voices to “studio voice” in the VTS Editor casting for all those whose texts are to be voiced.

Once the general description of the module has been given in the summary sheet, we can go into the details of the texts by providing, where necessary, additional information on the lines the actor is to perform.

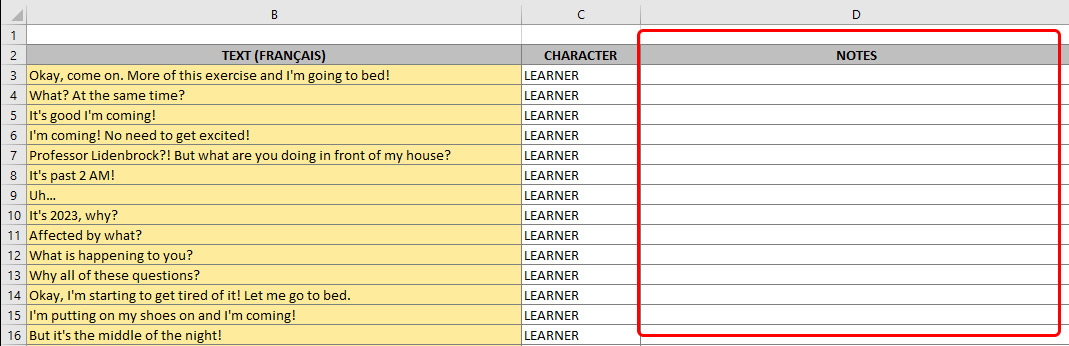

To provide these details, use the “notes” column in the text export.

Here are some points to pay attention to:

– The pronunciation of specific terms:

Check for any terms whose pronunciation might be in doubt. These may include:

- Acronyms: indicate for each one whether you want it pronounced as a word or letter by letter.

E.g. “CPAM”: “cepame”, or “C-P-A-M”, using dashes to show that each letter is pronounced?

- Terms relating to a particular field of activity: specialised technical or medical vocabulary, etc.

E.g. “LMWHs anti-Xa/anti-IIa activity ratio > 1.5”: “Xa” is read “10 activated” and “anti-IIa” is read “anti 2 activated”.

- The name of a product, a brand or a person:

E.g. “Hyundai”: “H’youn-dé” (not “H’youn-daï”); “Taibi” pronounced “té-bi”…

- Foreign terms, for which you may prefer a pronunciation in the term’s native language or, for example, a French pronunciation if the recording is in French.

A good example comes from the French film “City of Fear”, in which two characters fail to understand each other because they pronounce the English term “serial killer” differently.

So, for you, will it be “serial killer” or “seeuh-ree-uhl ki-luh”? 😉

You can write a difficult word phonetically in square brackets or use any other clear system to guide the actor, for example by providing an audio file of the word as it should be pronounced or by comparing its pronunciation with other unambiguous, well-known words.

– Intonation and rhythm: These can also make your script more effective by creating an atmosphere of suspense, confidence, unease, irony, etc. They generate emotions in your learners that help anchor the knowledge or, on the contrary, can be detrimental to it if badly used.

If an extreme level of precision is required for the rhythm and intonation of certain sentences, you can also, in some cases, make an audio recording to convey the intended delivery to the actor (for example, in awareness training about a specific professional environment).

This is crucial in the communication or care sectors, and it remains important in all sectors since the module you record will be a communication tool.

Pauses can also be used to accompany the visual rhythm of the module and promote understanding or emphasise the importance of certain terms.

If the way a character should be played differs from the general description in the summary sheet, or if you feel it would help to add contextual details to certain sentences, you can also specify this in the “notes” column, e.g. “the character takes an ironic tone”.

– Background voices

If your project needs them, don’t forget to include them as additional texts (to be added to the word count) so that the studio can record them.

We are not talking here about ambient sounds that you can easily find in specialised sound banks (crowd noise, etc.), but about background sounds specific to your project, because they involve your characters or particular lines that need to be heard but have not necessarily been written into the module, and therefore will not appear in the automatic text export.

For example, we encountered this case during the recording of a serious game where an emergency situation had to be dealt with: the whole scene took place on the telephone, the learner could not see the caller and it was, therefore, all the more important to simulate a realistic sound environment to understand the urgency and dramatic tension encountered in such a situation. The studio was able to provide the sound of a violent man swearing and trying to break down the door of the room where his victim was hidden.

What to do after the studio recording?

Once the studio voices have been recorded, they can easily be imported back into VTS Editor (see the “Importing voices” article in the VTS documentation for more information).

You can then listen to them in context in your final module, and one by one, by character or by scene, using the VTS Editor text manager for a detailed review.

You can first do an initial playthrough in context, then a careful review in the text manager to spot any errors.

We hope you find these tips useful!

As each project is unique, you can also consult us for more in-depth expertise, and feel free to comment on this article to tell us how you organise the vocalisation of your modules.

In addition, a practical document to help you with your vocalisation process will soon be available on our VTStack resource and instructional design platform. In the meantime, we invite you to discover the platform and all its available resources here: